Comparing GraalVM native image with JVM

The following charts look at some applications that are built with both a traditional JVM and GraalVM native image. As part of the build process, each application is built as two Docker images: a traditional JVM application using a base of amazoncorretto:25-al2023-headless, and a GraalVM native image using a base of redhat/ubi10-micro:10.0.

The applications are deployed into Kubernetes (K8s). To perform the comparison, the K8s deployment is switched between the two Docker images. This gives a reasonable like-for-like comparison between the two approaches.
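Because the same K8s deployment flips between the two images, it helps if each pod reports which runtime it is running on, so metrics from before and after the swap can be told apart. A minimal sketch, assuming your metrics setup accepts a tag; the `RuntimeKind` class and the `jvm`/`native` tag values are hypothetical. It reads the `org.graalvm.nativeimage.imagecode` system property that native image sets at run time; `org.graalvm.nativeimage.ImageInfo.inImageRuntimeCode()` in the GraalVM SDK performs the same check if you prefer an API over a raw property.

```java
// Hypothetical helper: decide whether this process is a GraalVM native image
// or a regular JVM, e.g. to tag metrics when the K8s image is swapped.
final class RuntimeKind {

    // Native image sets this property to "buildtime" or "runtime";
    // on a regular JVM it is normally absent.
    private static final String IMAGE_CODE_KEY = "org.graalvm.nativeimage.imagecode";

    static String current() {
        String imageCode = System.getProperty(IMAGE_CODE_KEY);
        return "runtime".equals(imageCode) ? "native" : "jvm";
    }

    public static void main(String[] args) {
        System.out.println("runtime=" + current());
    }
}
```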

For these comparisons:

- Applications use avaje-nima, Helidon SE, Ebean ORM and Postgres

- Java 25.0.1 and GraalVM 25.0.1

- PGO (Profile Guided Optimization) was not used

- JVM applications used ZGC (or G1); GraalVM native images used G1

- Memory comparisons focused on RSS and Heap Used

- Native image build time: 2m 6s on an 8-core GitHub worker
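RSS and Heap Used measure different things, which is why the gap between them matters in the charts. Heap Used covers only the Java objects currently in the heap, while RSS is all physical memory held by the process (heap, thread stacks, compiled code, native buffers and so on). A minimal sketch of reading both from inside the process, assuming Linux (`/proc/self/status`); in practice these numbers would normally come from the monitoring stack, and the `MemoryStats` class is hypothetical.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.List;

// Hypothetical helper showing the two memory metrics used in the comparisons.
final class MemoryStats {

    // Heap Used: bytes currently occupied within the Java heap.
    static long heapUsedBytes() {
        Runtime rt = Runtime.getRuntime();
        return rt.totalMemory() - rt.freeMemory();
    }

    // Parses the "VmRSS:   123456 kB" line of /proc/self/status; -1 if absent.
    static long parseVmRssKb(List<String> statusLines) {
        for (String line : statusLines) {
            if (line.startsWith("VmRSS:")) {
                String[] parts = line.substring("VmRSS:".length()).trim().split("\\s+");
                return Long.parseLong(parts[0]);
            }
        }
        return -1;
    }

    // RSS: physical memory held by the whole process. Linux only; -1 otherwise.
    static long rssBytes() {
        try {
            long kb = parseVmRssKb(Files.readAllLines(Path.of("/proc/self/status")));
            return kb < 0 ? -1 : kb * 1024;
        } catch (IOException e) {
            return -1; // e.g. /proc not available on this OS
        }
    }

    public static void main(String[] args) {
        System.out.printf("heapUsed=%d MB, rss=%d MB%n",
                heapUsedBytes() / (1024 * 1024), rssBytes() / (1024 * 1024));
    }
}
```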

Case: Medium-sized application, swapping to native

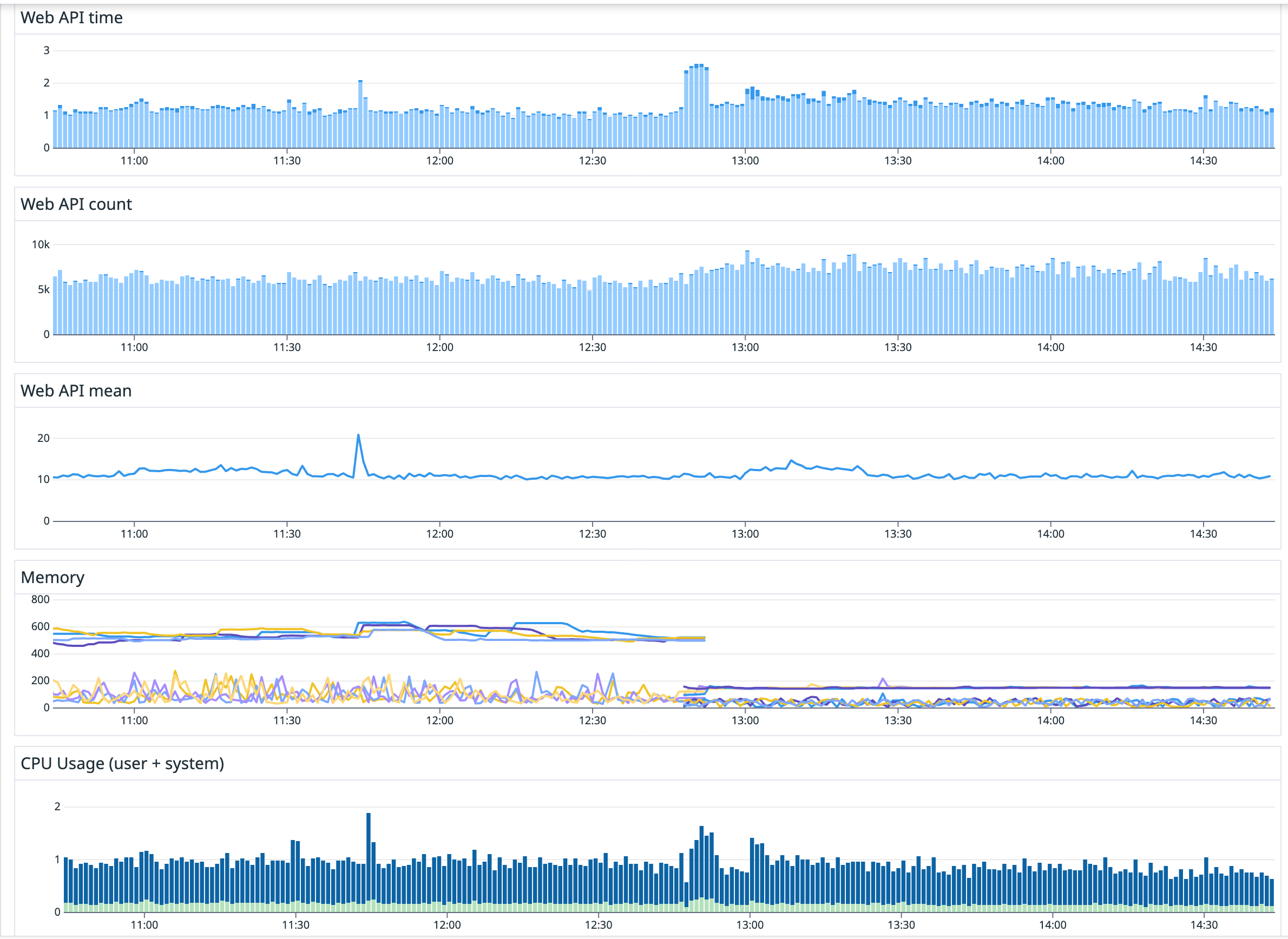

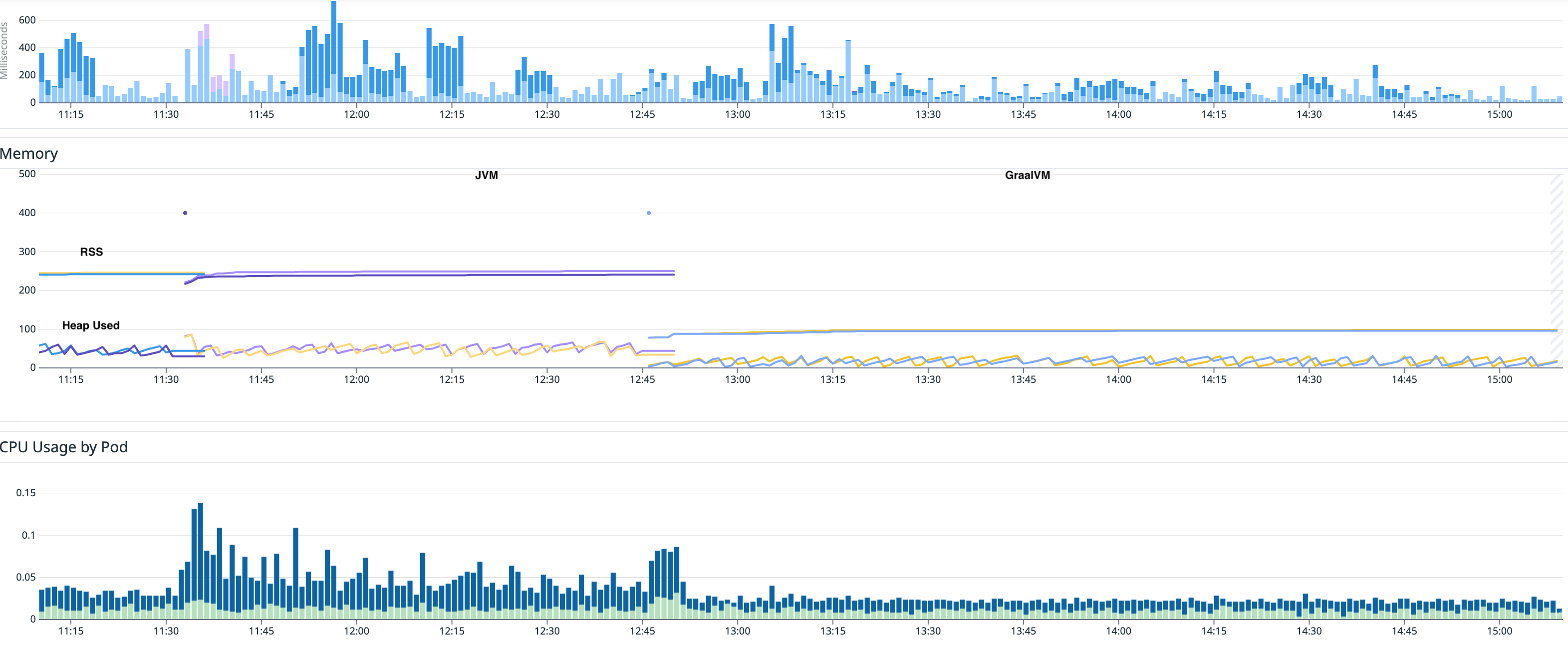

At around 12:45, a K8s deployment swapped the application from the JVM version to the GraalVM native image version. This is production load (not synthetic), so the load varies over time. Note that just around this deployment time the application saw an increase in requests (from ~12:50 to ~14:25).

Notes:

- RSS Memory: We see a reduction in RSS memory from ~500MB to ~180MB.

- Load spike: From ~12:50 to ~14:25 there was an observable increase in requests/load

- Heap Used Memory: JVM using ZGC, GraalVM using G1. With GraalVM we see a flatter Heap Used line

- CPU load: Pretty similar, slightly less CPU consumed with GraalVM

- Mean Latency: Pretty similar, slight advantage to the JVM

- Max Latency: Not shown but pretty even

- Memory Spikes: This application has some endpoints that are not ideal and produce memory spikes that both the JVM and native versions have to deal with. These spikes are more noticeable on the JVM version; it appears that GraalVM/G1 deals with those spikes differently (more aggressive memory allocation and deallocation).

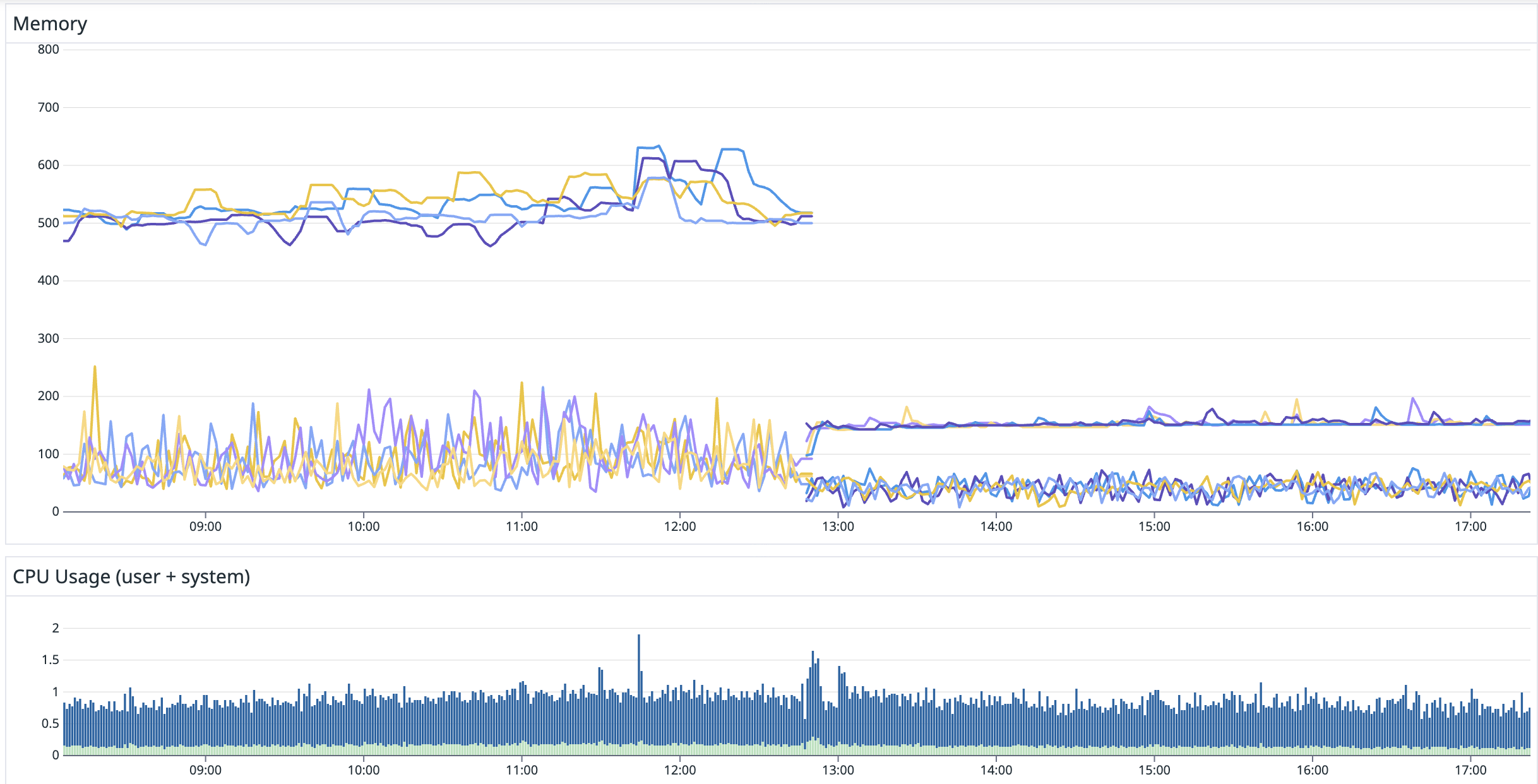

For a closer look at memory, the chart below shows RSS memory and Heap Used, with 4 pods running in K8s.

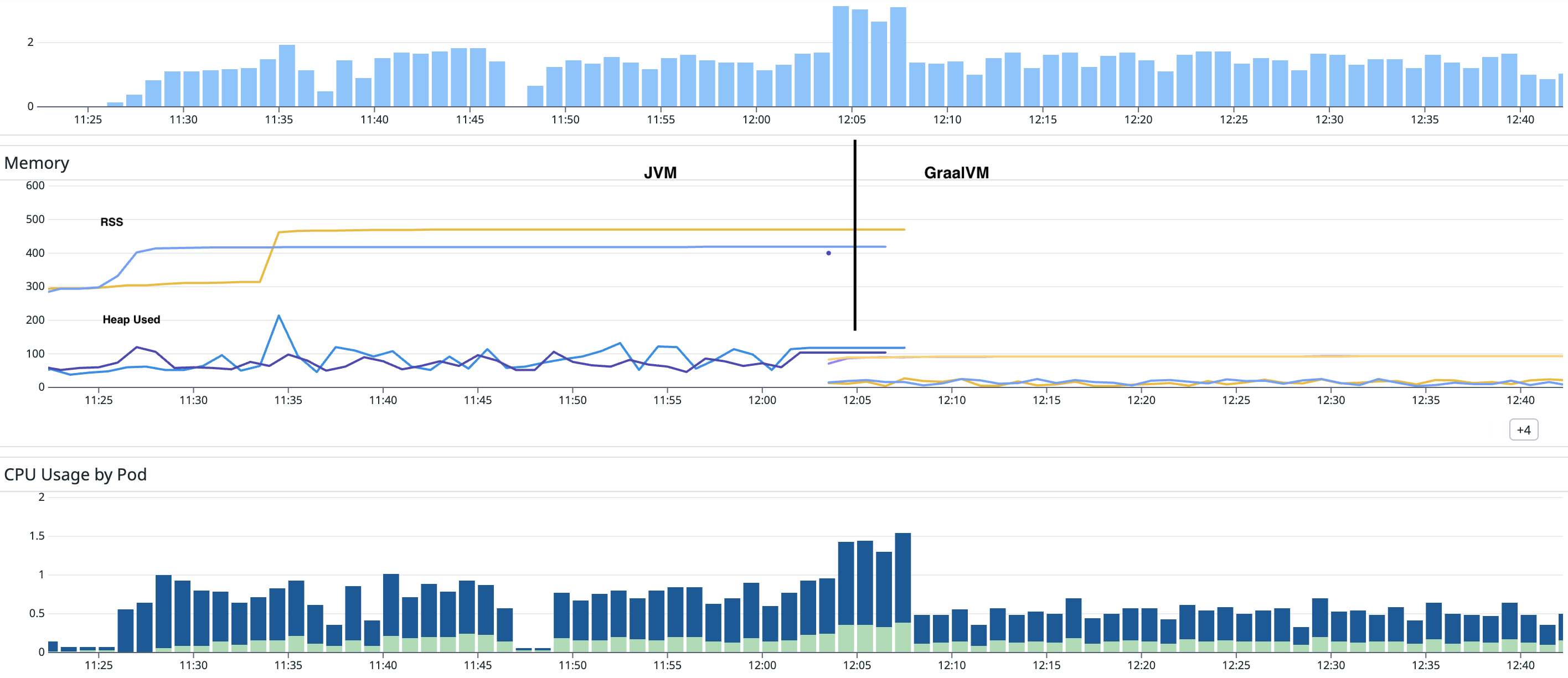

Case: Tiny application, tiny load

This comparison is a tiny Helidon 4 application that uses avaje-nima to provide a single HTTP endpoint that returns a JSON response. The load applied is very light synthetic load, just 10 requests per second.

We swap the K8s deployment between the two Docker images to compare GraalVM native image with the traditional JVM.
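For a feel of how small this application is, here is a rough stand-in using only the JDK: one endpoint returning JSON, with a new virtual thread per request. It uses the built-in `com.sun.net.httpserver` server rather than Helidon 4 and avaje-nima, and the `/hello` path and response body are hypothetical; it sketches the shape of the app, not the app that was measured.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.OutputStream;
import java.net.InetSocketAddress;
import java.nio.charset.StandardCharsets;
import java.util.concurrent.Executors;

// JDK-only stand-in (NOT Helidon/avaje-nima): a single JSON endpoint.
final class TinyJsonServer {

    static HttpServer start(int port) throws IOException {
        HttpServer server = HttpServer.create(new InetSocketAddress(port), 0);
        server.createContext("/hello", exchange -> {
            byte[] body = "{\"message\":\"hello\"}".getBytes(StandardCharsets.UTF_8);
            exchange.getResponseHeaders().set("Content-Type", "application/json");
            exchange.sendResponseHeaders(200, body.length);
            try (OutputStream out = exchange.getResponseBody()) {
                out.write(body); // closing the body stream completes the exchange
            }
        });
        // Each request is handled on its own virtual thread (Java 21+).
        server.setExecutor(Executors.newVirtualThreadPerTaskExecutor());
        server.start();
        return server;
    }

    public static void main(String[] args) throws IOException {
        HttpServer server = start(8080);
        System.out.println("Listening on port " + server.getAddress().getPort());
    }
}
```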

Notes:

- RSS Memory: We see a reduction in RSS memory from ~250MB to ~100MB.

- Heap Used Memory: Both are using G1 with MaxHeapSize=400m. With GraalVM we see a flatter Heap Used line

- CPU Startup: We see CPU spikes at JVM startup. Note the scale, which is ~0.3, so this isn't actually much CPU, but it looks significant because (A) there is very little load on this application and (B) Virtual Threads rock

- CPU load: Low for both, Virtual threads rock!

- Mean Latency: ~1ms and pretty even (tiny advantage to GraalVM)

- Max Latency: Not shown but pretty even

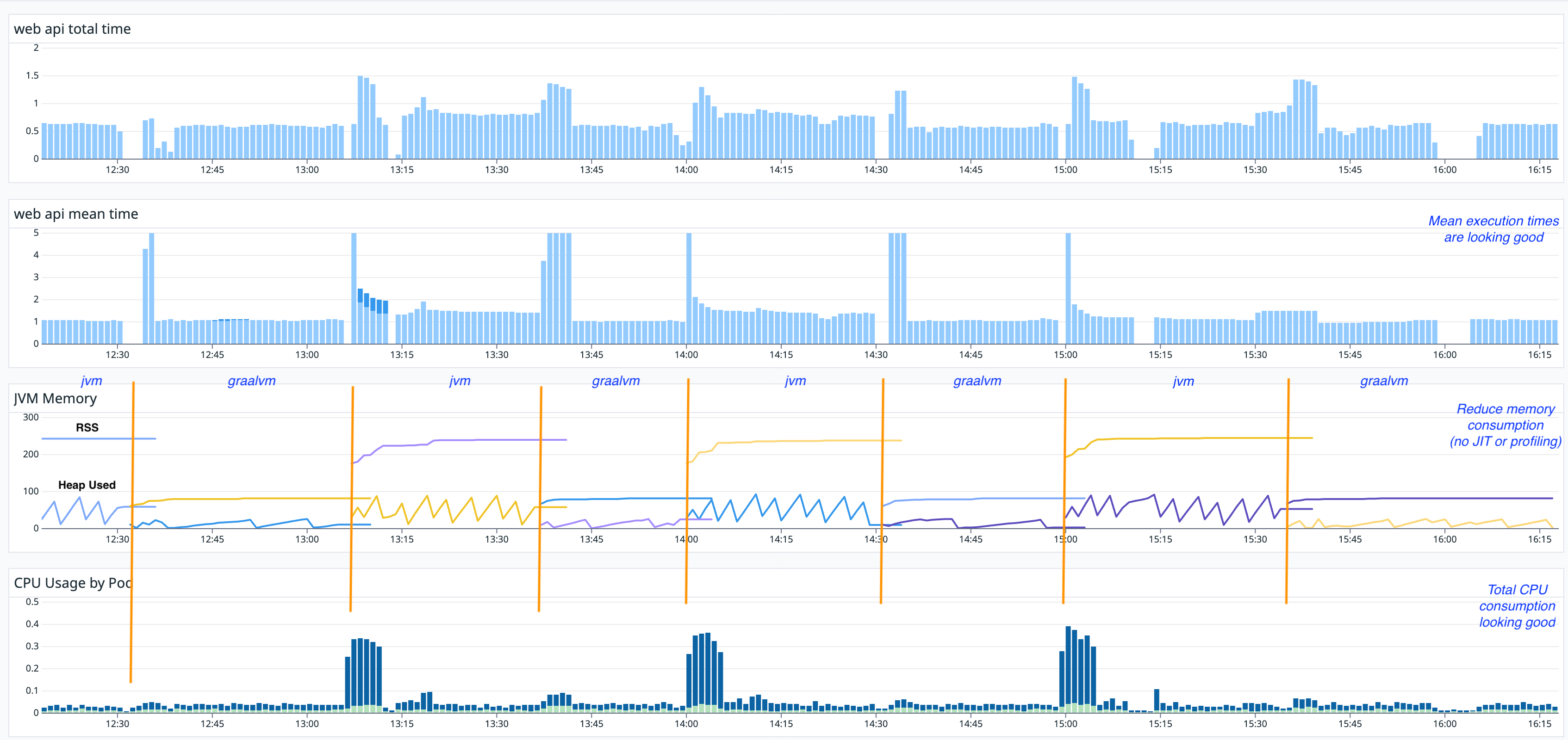

Case: Decent load on heavier endpoint

For this comparison, a decent synthetic load is applied to an application with a more complex endpoint that has a mean latency of around 19ms. A notable difference is that the JVM version is using ZGC rather than G1. The GraalVM version is still using G1.
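Since the collector differs between the two sides in some cases (ZGC via `-XX:+UseZGC` or G1 via `-XX:+UseG1GC` on the JVM, and G1 selected at build time with the `--gc=G1` option for native image), it is worth having each pod report which collector it is actually running. A small sketch using the standard management API; the `GcInfo` class is hypothetical, and on native image the set of MXBeans and their names may differ from HotSpot's.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;
import java.util.List;

// Hypothetical helper: list the garbage collector MXBeans of this process.
final class GcInfo {

    static List<String> collectorNames() {
        return ManagementFactory.getGarbageCollectorMXBeans().stream()
                .map(GarbageCollectorMXBean::getName)
                .toList();
    }

    public static void main(String[] args) {
        System.out.println("GC: " + collectorNames());
    }
}
```

On HotSpot, the bean names start with "G1" or "ZGC" depending on which of the two collectors is in use.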

Notes:

- RSS Memory: Reduction in RSS memory from ~450MB to ~100MB.

- Heap Used Memory: GraalVM showing a flatter Heap Used line

- CPU load: GraalVM showing less CPU consumption

- Mean Latency: Not shown but around 19ms for both

- Max Latency: Not shown but pretty even at around 190ms

Case: Interesting optimization for JSON streaming

For the comparison below, there is no even, synthetically generated load; instead the application is used in a more real-world manner with varying load. The JVM version is using ZGC rather than G1. The GraalVM version is still using G1.

You can't see this without some other metrics, but the GraalVM version optimizes one of the endpoints in use (the medium blue on the first chart) noticeably better. Its mean latency dropped from ~150ms on the JVM version to ~45ms on the GraalVM version. Perhaps C2 wasn't given enough time or profiling data to optimize this endpoint as well as GraalVM did with its AOT optimizations.

This endpoint is a streaming endpoint that returns New Line Delimited JSON (NDJSON) from a Postgres database using Ebean ORM's findStream(). The GraalVM version is significantly outperforming the JVM version for this endpoint.
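The shape of such an endpoint is: pull rows from a stream, serialize each one, write it followed by a newline, and close the stream when done. A minimal sketch using only the JDK: the `Stream` stands in for Ebean ORM's `findStream()` (which, like any database-backed stream, should be closed, hence the try-with-resources), and the hand-written `toJson` stands in for avaje-jsonb's generated adapter. `Customer`, `toJson` and `writeNdjson` are hypothetical names.

```java
import java.io.IOException;
import java.io.StringWriter;
import java.io.UncheckedIOException;
import java.io.Writer;
import java.util.stream.Stream;

// Sketch of an NDJSON response body: one JSON object per line.
final class NdjsonWriter {

    record Customer(long id, String name) {}

    // Minimal escaping for the sketch only (backslash, quote, newline).
    static String quote(String s) {
        return "\"" + s.replace("\\", "\\\\").replace("\"", "\\\"").replace("\n", "\\n") + "\"";
    }

    // Hypothetical stand-in for a generated JSON adapter.
    static String toJson(Customer c) {
        return "{\"id\":" + c.id() + ",\"name\":" + quote(c.name()) + "}";
    }

    // Writes each row as it is produced; closing the stream would release
    // the cursor/connection for a database-backed stream.
    static void writeNdjson(Stream<Customer> rows, Writer out) throws IOException {
        try (rows) {
            rows.forEach(c -> {
                try {
                    out.write(toJson(c));
                    out.write('\n');
                } catch (IOException e) {
                    throw new UncheckedIOException(e);
                }
            });
        }
        out.flush();
    }

    public static void main(String[] args) throws IOException {
        StringWriter out = new StringWriter();
        writeNdjson(Stream.of(new Customer(1, "Ann"), new Customer(2, "Bo \"B\"")), out);
        System.out.print(out);
    }
}
```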

In general, GraalVM is optimizing well without needing PGO.

Notes:

- RSS Memory: Reduction in RSS memory from ~250MB to ~100MB.

- Heap Used Memory: GraalVM showing a flatter Heap Used line

- CPU load: GraalVM showing less CPU consumption

- Mean Latency: A notable drop in latency for a JSON streaming endpoint
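The four cases above quote approximate before/after RSS values. Converting them to a percentage reduction makes them easier to compare; a small sketch with a hypothetical `RssReduction` class, using only the figures quoted in the notes.

```java
// Percentage reduction in RSS (JVM -> native image) for the cases above.
final class RssReduction {

    static double percentReduction(double jvmMb, double nativeMb) {
        return (jvmMb - nativeMb) / jvmMb * 100.0;
    }

    public static void main(String[] args) {
        String[] names = {"Medium app", "Tiny app", "Heavier endpoint", "JSON streaming"};
        double[][] rssMb = {{500, 180}, {250, 100}, {450, 100}, {250, 100}};
        for (int i = 0; i < names.length; i++) {
            System.out.printf("%-16s %3.0f MB -> %3.0f MB : %.0f%% less RSS%n",
                    names[i], rssMb[i][0], rssMb[i][1],
                    percentReduction(rssMb[i][0], rssMb[i][1]));
        }
    }
}
```

That works out to roughly 60% to 78% less RSS; the inputs are approximate readings from the charts, so the percentages are rough too.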

General notes

Heap used is flatter?

Not really sure why yet. What we do know is that GraalVM native image includes an optimization for object headers that reduces the memory footprint of objects. This option is also available for the JVM, but it was not enabled for this comparison. GraalVM also does some other build-time initialization for G1, but I don't know the details.

So the reason for the difference in Heap Used remains an open question for now.
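Assuming the JVM counterpart referred to here is Compact Object Headers (JEP 519, a product option in Java 25 enabled with `-XX:+UseCompactObjectHeaders`), a quick way to confirm how the JVM side of a comparison was configured is to ask HotSpot directly. A sketch; the `HeaderCheck` class is hypothetical, and it reports "unavailable" when the option or the diagnostic MXBean does not exist (older JDKs, or native image).

```java
import com.sun.management.HotSpotDiagnosticMXBean;
import java.lang.management.ManagementFactory;

// Hypothetical helper: report whether compact object headers are enabled.
final class HeaderCheck {

    static String compactObjectHeaders() {
        try {
            HotSpotDiagnosticMXBean hotspot =
                    ManagementFactory.getPlatformMXBean(HotSpotDiagnosticMXBean.class);
            if (hotspot == null) {
                return "unavailable"; // management interface not implemented
            }
            return hotspot.getVMOption("UseCompactObjectHeaders").getValue(); // "true"/"false"
        } catch (RuntimeException e) {
            // e.g. IllegalArgumentException when this JVM has no such option
            return "unavailable";
        }
    }

    public static void main(String[] args) {
        System.out.println("UseCompactObjectHeaders=" + compactObjectHeaders());
    }
}
```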

Virtual Threads ROCK!!

Ignoring application startup, the CPU usage with both the JVM and GraalVM is impressively low. These applications use Virtual Threads for handling requests and are IO bound (REST services doing mostly Postgres database interaction).

These applications are using Helidon 4 and we can't easily swap back to Platform threads for a more direct comparison, but we can observe the CPU stay impressively low as request load increases.
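The reason IO-bound services do so well here is that a virtual thread blocked on IO unmounts from its carrier (platform) thread, so thousands of waiting requests cost some memory for their stacks but essentially no CPU. A small sketch, not a benchmark: `Thread.sleep` stands in for waiting on Postgres, and `VirtualThreadDemo`/`runBlockingTasks` are hypothetical names.

```java
import java.time.Duration;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.atomic.AtomicInteger;

// Many IO-bound tasks, one virtual thread each (Java 21+).
final class VirtualThreadDemo {

    static int runBlockingTasks(int tasks, Duration simulatedIo) {
        AtomicInteger completed = new AtomicInteger();
        try (ExecutorService executor = Executors.newVirtualThreadPerTaskExecutor()) {
            for (int i = 0; i < tasks; i++) {
                executor.submit(() -> {
                    try {
                        Thread.sleep(simulatedIo); // stands in for a database call
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                        return;
                    }
                    completed.incrementAndGet();
                });
            }
        } // close() waits for all submitted tasks to finish
        return completed.get();
    }

    public static void main(String[] args) {
        long start = System.nanoTime();
        int done = runBlockingTasks(10_000, Duration.ofMillis(100));
        long elapsedMs = (System.nanoTime() - start) / 1_000_000;
        System.out.println(done + " tasks in " + elapsedMs + " ms");
    }
}
```

Because the sleeps overlap, the 10,000 tasks should finish in time on the order of a single sleep rather than the sum of all of them.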

GraalVM optimizing well (without needing PGO)

The JVM's C2 JIT compiler does a good job of optimizing code at runtime based on actual usage patterns. GraalVM native image does AOT (ahead-of-time) compilation, so it doesn't have the same runtime information to optimize with. However, GraalVM native image performs a number of optimizations at build time to produce efficient native code, and so far, for these applications, it has produced code that performs slightly better than C2 (which, for me, was unexpected).

How is this possible? GraalVM native image performs static analysis during the build process to identify likely hot spots in the code and optimize them. This analysis is based on heuristics rather than actual runtime data, but so far it seems to be doing a very good job.

GraalVM also introduced Machine Learning into its optimization. There is even a "Graal Neural Network" option (which wasn't used for these comparisons) that can be enabled to further improve optimization.

GraalVM optimizing streaming JSON endpoint

There is one interesting observation with a streaming endpoint that returns a stream of data as "New Line Delimited JSON" (NDJSON). In this case the GraalVM native image is performing significantly better than the JVM version.

This endpoint is using Ebean ORM findStream() to return a stream of data from Postgres and using avaje-jsonb to serialize each object to JSON as it is read from the database. It is not clear yet why GraalVM is performing better here, but it may be related to escape analysis and stack allocation optimizations that GraalVM is performing.
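For readers unfamiliar with the term: escape analysis lets a compiler avoid heap-allocating an object that never escapes the code that creates it, replacing the object with its fields held in locals or registers (scalar replacement). Both C2 and the Graal compiler do this, with Graal using a partial escape analysis that can also handle objects that escape only on some paths. The sketch below only shows the kind of code shape that qualifies; it is not the streaming endpoint's code, and whether either compiler actually eliminates the allocation here is not guaranteed. `EscapeAnalysisShape` and `Point` are hypothetical names.

```java
// Illustrative code shape for escape analysis; not a measurement.
final class EscapeAnalysisShape {

    record Point(long x, long y) {
        long squaredLength() {
            return x * x + y * y;
        }
    }

    static long sumOfSquares(long[] xs, long[] ys) {
        long total = 0;
        for (int i = 0; i < xs.length; i++) {
            // This Point never leaves the loop body, so a compiler MAY
            // scalar-replace it instead of allocating it on the heap.
            Point p = new Point(xs[i], ys[i]);
            total += p.squaredLength();
        }
        return total;
    }

    public static void main(String[] args) {
        System.out.println(sumOfSquares(new long[] {1, 2}, new long[] {3, 4}));
    }
}
```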

The libraries used

The applications used in these comparisons are built with:

- Helidon 4

- avaje-nima

- avaje-inject

- avaje-jsonb

- avaje-config

- avaje-simple-logger

- Postgres JDBC driver

- Ebean ORM

All of these libraries are well suited to GraalVM native image as they avoid using Reflection, Dynamic Proxies and Classpath scanning.

With the HTTP routing and JSON serialization being handled by generated code, it is perhaps not that surprising that GraalVM is able to optimize these well.
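To illustrate why generated code suits native image: a reflection-based serializer discovers fields at run time, which needs reflection metadata in a native image and is opaque to build-time analysis, while generated code is just ordinary direct method calls the compiler can analyze and inline. A sketch of the contrast; `toJsonGenerated` is hand-written to mimic the style of annotation-processor output and is not avaje-jsonb's actual generated API. Neither method escapes strings, so treat both as illustration only.

```java
import java.lang.reflect.RecordComponent;

// Reflection-based vs "generated"-style serialization of the same record.
final class AdapterVsReflection {

    record Order(long id, String status) {}

    // Reflection: components are discovered and invoked at run time.
    static String toJsonReflective(Object record) throws ReflectiveOperationException {
        StringBuilder sb = new StringBuilder("{");
        RecordComponent[] components = record.getClass().getRecordComponents();
        for (int i = 0; i < components.length; i++) {
            Object value = components[i].getAccessor().invoke(record);
            if (i > 0) {
                sb.append(',');
            }
            sb.append('"').append(components[i].getName()).append("\":");
            sb.append(value instanceof String s ? "\"" + s + "\"" : String.valueOf(value));
        }
        return sb.append('}').toString();
    }

    // "Generated" style: plain direct calls, fully visible to the compiler.
    static String toJsonGenerated(Order order) {
        return "{\"id\":" + order.id() + ",\"status\":\"" + order.status() + "\"}";
    }

    public static void main(String[] args) throws ReflectiveOperationException {
        Order order = new Order(7, "OPEN");
        System.out.println(toJsonGenerated(order));
        System.out.println(toJsonReflective(order));
    }
}
```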

Ebean ORM uses build time enhancement for entity classes. It also generates the necessary metadata needed for GraalVM native image so that Ebean ORM works well in GraalVM native image applications. Ebean ORM users will be happy to see that GraalVM does a good job of optimizing Ebean ORM usage even without PGO (Profile Guided Optimization).